Structural complexity theory

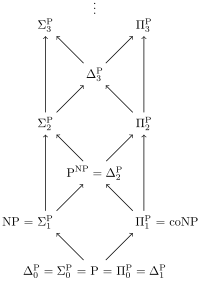

Structural complexity theory is a way of understanding and analyzing hard problems, like the most difficult math and computing problems.

Let's say you have a really hard math problem, like "what's the biggest number that can be expressed as the sum of two prime numbers?" This is a tough question, but it's not impossible to solve. In fact, a famous mathematician named Christian Goldbach came up with an idea to solve it way back in 1742. But what if instead of that question, we had a problem that was even harder? Something like "how can we quickly find the shortest path through a maze with a million twists and turns?"

Structural complexity theory helps us understand how hard these problems really are, and what it takes to solve them. It breaks down problems into smaller and smaller pieces, kind of like taking apart a puzzle. Each piece has its own complexity, which can be measured and understood.

So, for the maze problem, we might start by breaking it down into smaller mazes with fewer twists and turns. We can measure how hard these smaller mazes are to solve, and then build up to the big one. This helps us understand whether the problem is really hard, or if there might be a simpler way to solve it.

One key concept in structural complexity theory is "NP-complete" problems. These are problems that are really hard to solve, and don't have any known shortcuts. They're so hard that if you could solve any one of them quickly, you could solve all of them quickly. It's like a magic key that could open any lock. But because we haven't found that key yet, we have to use other methods to solve NP-complete problems.

In summary, structural complexity theory helps us understand and analyze hard problems by breaking them down into smaller pieces and measuring their complexity. This can help us find simpler ways to solve problems, or understand why some problems are just really, really hard.

Let's say you have a really hard math problem, like "what's the biggest number that can be expressed as the sum of two prime numbers?" This is a tough question, but it's not impossible to solve. In fact, a famous mathematician named Christian Goldbach came up with an idea to solve it way back in 1742. But what if instead of that question, we had a problem that was even harder? Something like "how can we quickly find the shortest path through a maze with a million twists and turns?"

Structural complexity theory helps us understand how hard these problems really are, and what it takes to solve them. It breaks down problems into smaller and smaller pieces, kind of like taking apart a puzzle. Each piece has its own complexity, which can be measured and understood.

So, for the maze problem, we might start by breaking it down into smaller mazes with fewer twists and turns. We can measure how hard these smaller mazes are to solve, and then build up to the big one. This helps us understand whether the problem is really hard, or if there might be a simpler way to solve it.

One key concept in structural complexity theory is "NP-complete" problems. These are problems that are really hard to solve, and don't have any known shortcuts. They're so hard that if you could solve any one of them quickly, you could solve all of them quickly. It's like a magic key that could open any lock. But because we haven't found that key yet, we have to use other methods to solve NP-complete problems.

In summary, structural complexity theory helps us understand and analyze hard problems by breaking them down into smaller pieces and measuring their complexity. This can help us find simpler ways to solve problems, or understand why some problems are just really, really hard.