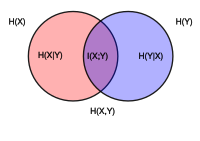

Conditional entropy

Conditional entropy measures how much more information we need to pin down one thing once we already know something related to it. Written H(Y|X), it is the uncertainty that remains about Y, on average, after X has been observed. It's a bit like answering a test question after being given a hint. If the hint settles the answer, nothing is left to figure out and the conditional entropy is zero. If the hint is unrelated to the answer, you are just as unsure as before, and the conditional entropy equals the entropy of the answer on its own, H(Y). Formally, H(Y|X) = −Σ p(x, y) log₂ p(y|x), summed over all pairs (x, y), which gives the result in bits.
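To make the formula concrete, here is a minimal Python sketch that computes H(Y|X) in bits from a joint distribution. The function name `conditional_entropy` and the input format (a dict mapping `(x, y)` pairs to probabilities that sum to 1) are illustrative assumptions, not a standard API. The example distributions are hypothetical and chosen to show the two extremes from the paragraph above, plus one case in between.

```python
import math


def conditional_entropy(joint):
    """Return H(Y|X) in bits for a joint distribution {(x, y): p(x, y)}.

    Assumes the probabilities are non-negative and sum to 1.
    """
    # Marginal p(x), obtained by summing the joint probabilities over y.
    p_x = {}
    for (x, _y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p

    # H(Y|X) = -sum over (x, y) of p(x, y) * log2 p(y|x),
    # where p(y|x) = p(x, y) / p(x).
    h = 0.0
    for (x, _y), p in joint.items():
        if p > 0:  # pairs with p(x, y) = 0 contribute nothing
            h -= p * math.log2(p / p_x[x])
    return h


# Hint unrelated to the answer: X and Y are independent fair coins.
unrelated = {(0, 0): 0.25, (0, 1): 0.25, (1, 0): 0.25, (1, 1): 0.25}
# Hint settles the answer: Y is always equal to X.
settles = {(0, 0): 0.5, (1, 1): 0.5}
# In between: when X = 0 the answer is known, when X = 1 it is a coin flip.
partial = {(0, 0): 0.5, (1, 0): 0.25, (1, 1): 0.25}

print(conditional_entropy(unrelated))  # 1.0
print(conditional_entropy(settles))    # 0.0
print(conditional_entropy(partial))    # 0.5
```

In the middle case, half the time the hint removes all doubt and half the time one full bit of uncertainty remains, so on average 0.5 bits are still needed.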
