Information entropy

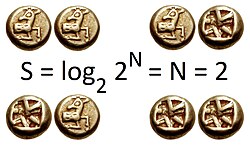

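Information entropy is a way to measure how uncertain or surprising something is. It tells us how much information we get, on average, when we find out what actually happened. Think of a big puzzle: if someone hides one piece and you have to guess which one it is, the more pieces it could be (and the more equally likely each one is), the harder it is to guess, and the higher the entropy. For example, if there were 3 equally likely possibilities, that would be lower entropy, but if there were 10 equally likely possibilities, that would be higher entropy. A fair coin flip has 1 bit of entropy, while a coin that always lands heads has 0 bits, because you learn nothing new by flipping it. Entropy helps us measure how much information is in a message, and helps us figure out how much we need to store or send to describe it.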
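Here is a small sketch in Python that shows the idea with numbers. It assumes the standard Shannon definition, where entropy in bits is the sum of p × log2(1/p) over every possible outcome with probability p. The function names `entropy` and `uniform_entropy` are made up just for this example.

```python
import math

def entropy(probabilities):
    """Shannon entropy in bits: sum of p * log2(1/p).

    Outcomes with probability 0 are skipped, since they never happen
    and add no information.
    """
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)

def uniform_entropy(n):
    """Entropy of n equally likely outcomes (this works out to log2(n))."""
    return entropy([1 / n] * n)

print(entropy([0.5, 0.5]))               # fair coin: 1.0
print(entropy([1.0]))                    # coin that always lands heads: 0.0
print(f"{entropy([0.9, 0.1]):.2f}")      # lopsided coin: 0.47
print(f"{uniform_entropy(3):.2f}")       # 3 equally likely choices: 1.58
print(f"{uniform_entropy(10):.2f}")      # 10 equally likely choices: 3.32
```

The lopsided coin lands heads 9 times out of 10, so it is easier to guess than a fair coin, and its entropy is lower. Going from 3 to 10 equally likely choices raises the entropy, just like the puzzle example.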
