F1 score

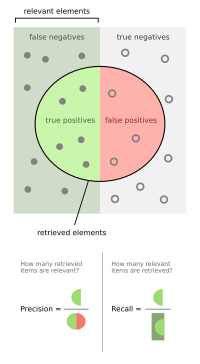

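The F1 score is a single number between 0 and 1 that summarizes how well a classification model performs on a given task. It is often reported as a percentage, which is why it can look like a score out of 100. The F1 score combines two other measures, precision and recall. Precision tells you how many of the items the model labeled as positive actually were positive. Recall tells you how many of the items that actually were positive the model managed to find. The F1 score is the harmonic mean of the two, F1 = 2 × precision × recall / (precision + recall). Because of this, it is high only when both precision and recall are high, and you get one number to look at instead of two.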
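To make the definitions concrete, here is a minimal sketch in plain Python for a binary task. It assumes labels are given as two equal-length lists and that `1` marks the positive class. The helper name `precision_recall_f1` is hypothetical, and it is not scikit-learn's `f1_score`. When there are no positive predictions or no actual positives, it returns 0 for the undefined ratio. That is a convention chosen here for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Hypothetical helper: precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    # True positives: predicted positive and actually positive.
    tp = sum(1 for t, p in pairs if p == positive and t == positive)
    # False positives: predicted positive but actually negative.
    fp = sum(1 for t, p in pairs if p == positive and t != positive)
    # False negatives: actually positive but the model missed it.
    fn = sum(1 for t, p in pairs if p != positive and t == positive)

    # Assumed convention: treat 0/0 as 0.0 instead of raising an error.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # Harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    y_true = [1, 1, 1, 1, 0, 0, 0, 0]
    y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
    p, r, f1 = precision_recall_f1(y_true, y_pred)
    print(round(p, 4), round(r, 4), round(f1, 4))  # 0.6667 0.5 0.5714
```

In this example the model finds 2 of the 4 real positives (recall 0.5), and 2 of its 3 positive guesses are correct (precision about 0.667). The plain average of the two would be about 0.583, but F1 comes out lower at about 0.571. This is because the harmonic mean is pulled toward the weaker of the two numbers.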

Related topics others have asked about: