History of computing

Computing refers to the process of solving a problem or performing a task with the use of machines. Computers are machines that help us do this more accurately, quickly and efficiently than if we did it by hand.

Computing dates back to the earliest times, when humans used their fingers to count. Later, we devised tools for solving arithmetic problems, such as the abacus, an early type of calculator.

The first design for a general-purpose computer, however, was the famous “Analytical Engine,” which Charles Babbage first described in 1837. Although it was never completely built, it was designed to be programmed with punched cards. It worked in decimal rather than binary; binary representation, the basis of nearly all modern computers, was adopted later.

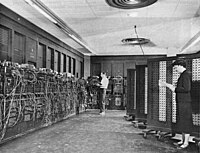

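In the 20th century, the “ENIAC,” often described as the first general-purpose electronic computer, was built during World War II to calculate artillery firing tables and completed in 1945. It was a huge machine that weighed about 30 tons and contained nearly 18,000 vacuum tubes. It was difficult to program, because it had to be reconfigured with cables and switches for each new problem, and it was far too expensive for anyone but governments and large institutions to use.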

In the 1960s, integrated circuits came into use, placing many electronic components on a single chip. This made computers smaller, cheaper and more accessible. In 1969, near the end of the decade, the “ARPANET,” the predecessor of the internet, was created.

The 1970s saw the rise of personal computers (PCs), which were affordable enough for individuals to use at home. This was made possible by the first microprocessors, which placed a computer's entire central processor on a single chip. Apple was among the first companies to sell PCs in the late 1970s, and IBM followed with its IBM PC in 1981.

Today, computers are an integral part of our daily lives. We use them to communicate with each other, shop online, do research and much more. Computers have become smaller, faster and more powerful, allowing for greater innovation and productivity.