Binary entropy function

Okay kiddo, let's talk about the binary entropy function!

Have you ever played a game where you had to guess if a coin would land on heads or tails? That's a lot like how the binary entropy function works.

In computer science, we use something called "bits" to store information. A bit can have two values: 0 or 1.

Now imagine you have a bunch of coins, and for each one you try to guess whether it will land heads or tails. Each time you guess, you might be right, but you might also be wrong.

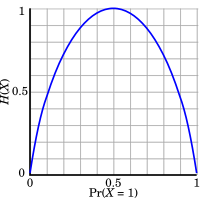

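The binary entropy function measures how uncertain we are before the coin lands. If heads and tails are equally likely (a fair coin), we're as unsure as we can possibly be, and the entropy is at its highest: 1 bit. If the coin almost always lands the same way, we're pretty sure what will happen, and the entropy is close to 0.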
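For grown-ups who want to peek at the math, here is the standard formula, where p is the chance of heads, plus a few worked values. It uses the usual convention that 0 · log₂ 0 counts as 0.

$$
H(p) = -p\log_2 p - (1-p)\log_2(1-p)
$$

$$
\begin{aligned}
H\!\left(\tfrac{1}{2}\right) &= -\tfrac{1}{2}(-1) - \tfrac{1}{2}(-1) = 1 \text{ bit} \quad \text{(fair coin: most unsure)}\\
H(0.9) &= -0.9\log_2 0.9 - 0.1\log_2 0.1 \approx 0.137 + 0.332 \approx 0.469 \text{ bits}\\
H(1) &= -1\cdot\log_2 1 - 0\cdot\log_2 0 = 0 \text{ bits} \quad \text{(always heads: no surprise)}
\end{aligned}
$$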

So the binary entropy function takes in one probability (like the chance of getting heads on a coin flip) and gives back the entropy, measured in bits. It's like a math helper that tells us how much we don't know, based on how likely the two outcomes are.
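If you'd like to try the "math helper" yourself, here is a minimal Python sketch. The function name `binary_entropy` is just a name chosen for this example, not something from a particular library. It takes the chance of heads and returns the entropy in bits.

```python
import math

def binary_entropy(p: float) -> float:
    """Return the binary entropy H(p), in bits, for a coin that lands heads with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    # A coin that always (or never) lands heads holds no surprise: 0 bits.
    # This also avoids calling log2(0), following the convention 0 * log2(0) = 0.
    if p in (0.0, 1.0):
        return 0.0
    q = 1.0 - p  # chance of tails
    return -p * math.log2(p) - q * math.log2(q)

# A fair coin, a lopsided coin, and a two-headed coin.
for p in (0.5, 0.9, 1.0):
    print(p, round(binary_entropy(p), 3))
```

Running it shows the fair coin at the top (1.0 bit), the lopsided coin lower (about 0.469 bits), and the two-headed coin at 0 bits. Swapping heads and tails gives the same answer, because H(p) equals H(1 − p).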

Do you have any more questions about the binary entropy function?