Markov text generators

Well kiddo, have you ever played with those magnetic letter toys on the fridge? You know, the ones where you can move the letters around to form different words and sentences? A Markov text generator is kinda like that, but for writing!

Instead of magnets, a Markov text generator uses a computer program to analyze a piece of writing (like a book, article, or even your favorite tweets) and figure out which words are most likely to follow each other in a sentence. Then, when you give a Markov text generator a starting word or phrase, it uses those patterns to "predict" what the next word or words should be, and continues building a sentence from there.
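Here's a tiny sketch of that idea in Python, in case you want to see it in action. It's just one simple way to do it, not how any particular generator works. The function names `build_chain` and `generate` and the sample sentence are made up for this example.

```python
import random
from collections import defaultdict

def build_chain(text):
    """Map each word to the list of words seen right after it."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return chain

def generate(chain, start, max_words=10, rng=random):
    """Begin at `start` and keep picking a word that followed the current one."""
    word = start
    output = [word]
    for _ in range(max_words - 1):
        followers = chain.get(word)
        if not followers:  # dead end: nothing ever followed this word
            break
        word = rng.choice(followers)  # repeated followers get picked more often
        output.append(word)
    return " ".join(output)

# Hypothetical sample text, just for illustration.
sample = "the cat sat on the mat and the cat climbed up the tree"
chain = build_chain(sample)
print(generate(chain, "the"))
```

Each run can print a different sentence, because the next word is chosen at random from the words that followed the current one in the sample. The more text you feed it, the more patterns it has to choose from.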

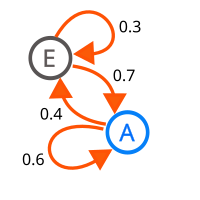

It's called a "Markov" generator because it uses something called a Markov chain, which is a fancy way of saying that it keeps track of the probability of one word following another. It picks each new word by looking only at the last word or two, not at the whole sentence so far. So if you give a Markov text generator the starting words "The cat", it might guess that the next word is "sat" because "cat sat" shows up a lot in the writing it studied. But if you give it the starting words "The cat climbed", it might guess that the next word is "up" because "climbed up" is another common pairing.
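To make "the probability of one word following another" a bit more concrete, here's a small Python sketch that counts which words follow which and turns the counts into probabilities. It assumes the simplest kind of chain, one that looks only at the current word. The function name `next_word_probabilities` and the sample text are made up for this example.

```python
from collections import Counter, defaultdict

def next_word_probabilities(text):
    """For each word, work out how likely each following word is."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    probs = {}
    for current, followers in counts.items():
        total = sum(followers.values())
        probs[current] = {word: n / total for word, n in followers.items()}
    return probs

# Hypothetical sample text, just for illustration.
sample = "The cat sat on the mat. The cat climbed up the tree. The cat sat down."
probs = next_word_probabilities(sample)
print(probs["cat"])      # chances for each word seen after "cat"
print(probs["climbed"])  # chances for each word seen after "climbed"
```

In this sample, "cat" is followed by "sat" two times out of three and by "climbed" once, while "climbed" is always followed by "up". That's why the generator leans toward "sat" after "The cat" but goes for "up" after "The cat climbed".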

Markov text generators can be fun to play with because they often create silly sentences that don't really make sense, but sometimes they sound eerily similar to something a real person might write. Some people even use Markov generators to create fake news articles or spam messages, which is why it's important to always be careful about what you read online.
