History of computing hardware (1960s–present)

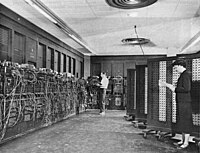

Computing hardware is the set of physical parts of a computer that allow it to operate and perform tasks. In the 1960s, computers were large and expensive, and only governments, large corporations, and universities could afford them. By then, transistors had largely replaced the vacuum tubes used in earlier machines, and integrated circuits began to appear in computers during the decade.

In the 1970s, smaller and less expensive computers were developed. They were built around the microprocessor, an integrated circuit that places a computer's central processing unit on a single chip. These machines were called microcomputers, and they paved the way for personal computers that people could use in their homes.

In the 1980s, personal computers, which had first appeared in the late 1970s, became widely available. These were machines that people could buy and use for word processing, playing games, and other tasks. They used floppy disks to store data and were in demand because of their versatility.

In the 1990s, computers became even smaller and more powerful. Laptops, which had grown out of the portable computers of the 1980s, became widespread and made it possible for people to work and communicate away from a desk. Desktop computers became less expensive and more sophisticated, with better graphics and audio capabilities.

In the 2000s, computing hardware continued to evolve rapidly. Smartphones, small portable devices that combine a phone with a general-purpose computer, became popular toward the end of the decade. They let people stay connected to the internet almost anywhere and stream audio and video.

Today, the pace of change remains high. Devices like tablets, smartwatches, and virtual reality headsets have become common. Computers are faster, smaller, and more powerful than ever, with the ability to store huge amounts of data and perform complex calculations in seconds.

